| Ben Mildenhall¹* | Jon Barron² | Jiawen Chen² | Dillon Sharlet² | Ren Ng¹ | Robert Carroll² |

| ¹UC Berkeley ²Google |

| * Work done while interning at Google. |


Abstract

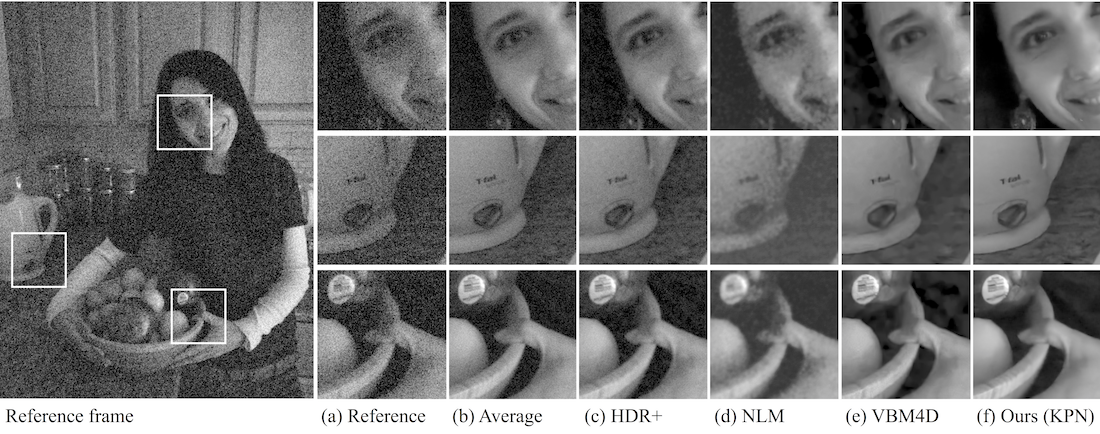

We present a technique for jointly denoising bursts of images taken from a handheld camera. In particular, we propose a convolutional neural network architecture for predicting spatially varying kernels that can both align and denoise frames, a synthetic data generation approach based on a realistic noise formation model, and an optimization guided by an annealed loss function to avoid undesirable local minima. Our model matches or outperforms the state-of-the-art across a wide range of noise levels on both real and synthetic data.

BibTeX

    @inproceedings{mildenhall2018kpn,
      author    = {Mildenhall, Ben and Barron, Jonathan T and Chen, Jiawen and Sharlet, Dillon and Ng, Ren and Carroll, Robert},
      title     = {Burst Denoising with Kernel Prediction Networks},
      booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
      year      = {2018}
    }
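As an illustration of what "spatially varying kernels" means in the abstract, the following is a minimal NumPy sketch of applying one K x K kernel per pixel and per frame to a burst, then averaging the filtered frames. It is not the authors' implementation: the function name `apply_predicted_kernels`, the array layouts, the edge padding, and the averaging over frames are assumptions made for this example, and the kernels are taken as given rather than predicted by a network.

```python
import numpy as np


def apply_predicted_kernels(burst, kernels):
    """Filter a burst with per-pixel kernels and merge the result.

    burst:   (N, H, W) array of N grayscale frames (assumed layout).
    kernels: (N, H, W, K, K) array, one K x K kernel per pixel per frame
             (assumed layout; in the paper these come from a CNN).
    Returns an (H, W) image: the mean over frames of the filtered frames.
    """
    n, h, w = burst.shape
    k = kernels.shape[-1]
    r = k // 2

    # Edge-pad spatially so every pixel has a full K x K neighbourhood.
    # The padding mode is an assumption for this sketch.
    padded = np.pad(burst, ((0, 0), (r, r), (r, r)), mode="edge")

    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            # View of each frame shifted so that it lines up with tap (dy, dx).
            shifted = padded[:, dy:dy + h, dx:dx + w]
            # Weight by that tap of every pixel's kernel, then sum over frames.
            out += (kernels[:, :, :, dy, dx] * shifted).sum(axis=0)

    # Average the per-frame filtered results.
    return out / n
```

Because each pixel has its own kernel in every frame, an off-centre kernel can pull in a shifted neighbour (alignment) while a spread-out kernel averages nearby pixels (denoising). With identity kernels, where only the centre tap is 1, the function reduces to a plain average of the frames.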
